2016 Looking at People CVPR Challenge - Track 2: Accessories Classification

Evaluation metrics

Evaluation Criteria

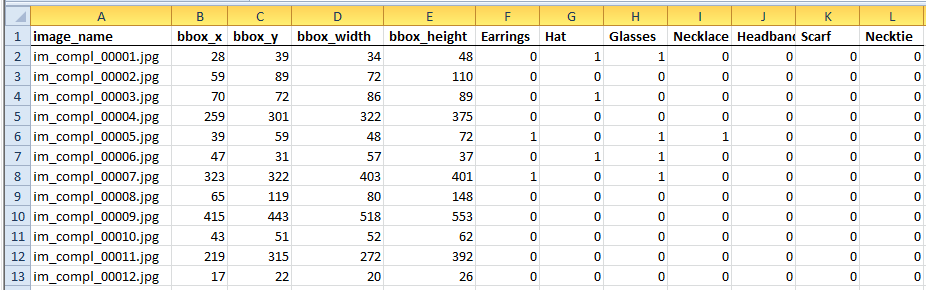

For validation and testing, your system should output, for each image, a vector with the following structure:

Where:

- 1st column: name of the image

- 2nd column: x coordinate of the bounding box around the detected face

- 3rd column: y coordinate of the bounding box around the detected face

- 4th column: width of the bounding box around the detected face

- 5th column: height of the bounding box around the detected face

- 6th to 12th columns: one column per accessory (seven in total). Write 1 if the accessory is present in the image and 0 if it is not.
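The column layout above can be sketched as a small row builder. This is a minimal sketch under stated assumptions: the page does not give the field delimiter (a comma is assumed and can be changed via `sep`), it does not name the seven accessories (they are only indexed by position here), and the function name `format_prediction` and the file name `img_0001.jpg` are hypothetical.

```python
# Hypothetical helper: the challenge page only defines the column order,
# not a delimiter or the accessory names, so both are assumptions here.
NUM_ACCESSORIES = 7  # columns 6 to 12


def format_prediction(image_name, bbox, accessories, sep=","):
    """Build one output row: name, x, y, width, height, then 7 binary flags."""
    x, y, w, h = bbox
    if len(accessories) != NUM_ACCESSORIES:
        raise ValueError(
            f"expected {NUM_ACCESSORIES} accessory flags, got {len(accessories)}"
        )
    # 1 = accessory present, 0 = accessory not present
    flags = [1 if present else 0 for present in accessories]
    fields = [image_name, x, y, w, h, *flags]
    return sep.join(str(f) for f in fields)


row = format_prediction("img_0001.jpg", (34, 50, 120, 140), [1, 0, 0, 1, 0, 0, 0])
print(row)  # img_0001.jpg,34,50,120,140,1,0,0,1,0,0,0
```

Validating the flag count up front catches rows that would otherwise shift the accessory columns out of alignment with the ground truth.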

This problem can be approached as either a classification or a regression problem; in both cases, results are evaluated by computing the mean squared error between the predictions and the ground-truth data. The error ranges from 0 (the face was correctly detected and all accessories were correctly classified) to 1 (the face was not detected, or all accessories were misclassified). Faces that are not predicted receive an error of 1.
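The scoring rule can be sketched as a per-image mean squared error over the seven accessory values, averaged over the test set. This is an illustrative sketch, not the organizers' evaluation script: the page does not say how a detection is judged correct, so here any submitted row counts as a detection and only images with no prediction are penalized (with the stated error of 1). The function names `image_error` and `mean_error` and the image names are hypothetical. If predictions are kept within [0, 1] (binary flags or regression scores), each squared difference is at most 1, so the per-image error stays in the stated 0 to 1 range.

```python
def image_error(pred_flags, gt_flags):
    """Mean squared error over the accessory values of one image."""
    if len(pred_flags) != len(gt_flags):
        raise ValueError("prediction and ground truth must have the same length")
    return sum((p - g) ** 2 for p, g in zip(pred_flags, gt_flags)) / len(gt_flags)


def mean_error(predictions, ground_truth):
    """Average per-image error over every ground-truth image.

    Both arguments map image name -> list of accessory values.
    Assumption: an image missing from `predictions` means the face was
    not predicted, which scores 1 as the rules state.
    """
    total = 0.0
    for name, gt_flags in ground_truth.items():
        pred_flags = predictions.get(name)
        total += 1.0 if pred_flags is None else image_error(pred_flags, gt_flags)
    return total / len(ground_truth)


gt = {"a.jpg": [1, 0, 0, 0, 0, 0, 0], "b.jpg": [0, 1, 1, 0, 0, 0, 0]}
pred = {"a.jpg": [1, 0, 0, 0, 0, 0, 0]}  # no prediction for b.jpg
print(mean_error(pred, gt))  # 0.5
```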

Submission format

In this track, participants should submit a ZIP file containing their models, together with a file called README.txt that explains how to run the code. We will run your code on our test data and then calculate the mean error over the whole test set.
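One way to bundle a submission is with Python's standard `zipfile` module. This is a minimal sketch that assumes README.txt sits at the root of the archive; the helper name `package_submission`, the file names `predict.py` and `model/weights.bin`, and the command written into the README are all hypothetical placeholders for a team's own files.

```python
import io
import zipfile


def package_submission(files):
    """Bundle submission files into an in-memory ZIP archive.

    files: dict mapping path inside the archive -> file contents (bytes).
    Refuses to build the archive if README.txt is missing.
    """
    if "README.txt" not in files:
        raise ValueError("submission must include README.txt with run instructions")
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path, data in files.items():
            zf.writestr(path, data)
    return buffer.getvalue()


archive = package_submission({
    # Hypothetical run instructions; the real command depends on your code.
    "README.txt": b"python predict.py --images <dir> --out predictions.csv\n",
    "predict.py": b"# model inference code goes here\n",
    "model/weights.bin": b"\x00\x01",
})
with zipfile.ZipFile(io.BytesIO(archive)) as zf:
    print(sorted(zf.namelist()))  # ['README.txt', 'model/weights.bin', 'predict.py']
```

Writing the bytes to disk afterwards (for example with `open("submission.zip", "wb").write(archive)`) gives the file to upload.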

Solutions are ranked in ascending order of this error; the winner is the submission with the smallest error.
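The ranking rule amounts to sorting by mean error, lowest first. In this sketch, the tie handling (equal errors share a rank) is an assumption, since the page does not address ties, and the function name `rank_submissions` and team names are hypothetical.

```python
def rank_submissions(errors):
    """Return (rank, team, error) tuples, lowest error first.

    errors: dict mapping team name -> mean error on the test set.
    Assumption: teams with equal error share the same rank.
    """
    ordered = sorted(errors.items(), key=lambda item: item[1])
    ranking = []
    for position, (team, err) in enumerate(ordered, start=1):
        # Reuse the previous rank on a tie, otherwise use the list position.
        rank = ranking[-1][0] if ranking and err == ranking[-1][2] else position
        ranking.append((rank, team, err))
    return ranking


print(rank_submissions({"team_a": 0.21, "team_b": 0.08, "team_c": 0.21}))
# [(1, 'team_b', 0.08), (2, 'team_a', 0.21), (2, 'team_c', 0.21)]
```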
