UPAR (WACV'23)

Dataset description

The challenge dataset used in the UPAR Challenge at WACV'23 is a subset of the existing UPAR dataset [1]. The challenge dataset consists of the harmonization of three public datasets (PA100K [2], PETA [3], and Market1501-Attributes [4]) with different characteristics and a private test set. 40 binary attributes have been unified between those for which additional annotations are provided. This dataset enables the investigation of attribute recognition and attribute-based person retrieval methods' generalization ability under different attribute distributions, viewpoints, varying illumination, and low resolutions.

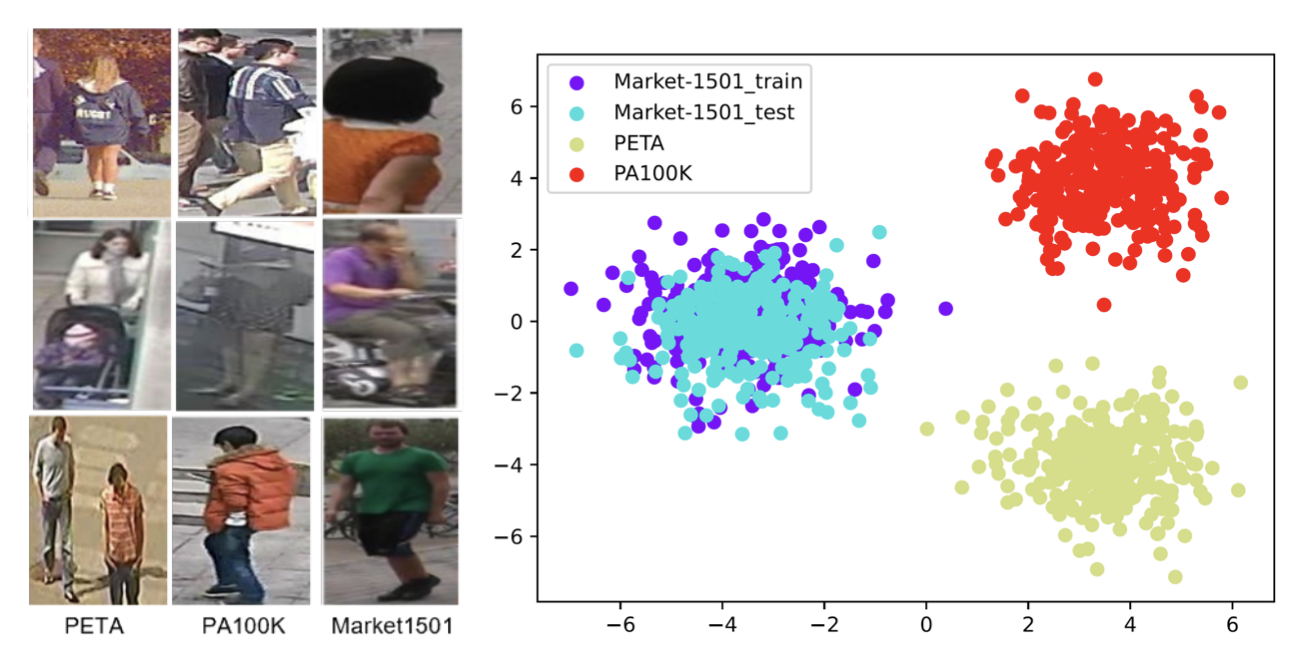

This challenge aims to spotlight the problem of domain gap in a real-world surveillance context and highlight the challenges and limitations of existing methods to provide a future research direction. As can be seen in Fig. 1, the datasets have different characteristics and the distributions of the data vary greatly, compared to different sets of the same dataset. Which is why this challenge applies a cross-domain evaluation scheme using three different splits to assess the generalization performance of methods.

Fig 1. Sample images from the sub-datasets and domain distribution.

The following table provides an overview of available attribute categories and associated binary attributes.

|

Category |

Binary Attributes |

|

Age |

"Age-Young", "Age-Adult", "Age-Old", |

|

Gender |

"Gender-Female", |

|

Hair-length |

"Hair-Length-Short", "Hair-Length-Long", "Hair-Length-Bald", |

|

UpperBody-Length |

"UpperBody-Length-Short", |

|

UpperBody-Color |

"UpperBody-Color-Black", "UpperBody-Color-Blue", "UpperBody-Color-Brown", "UpperBody-Color-Green", "UpperBody-Color-Grey", "UpperBody-Color-Orange", "UpperBody-Color-Pink", "UpperBody-Color-Purple", "UpperBody-Color-Red", "UpperBody-Color-White", "UpperBody-Color-Yellow", "UpperBody-Color-Mixture", "UpperBody-Color-Other", "UpperBody-Color-Unknown", |

|

LowerBody-Length |

"LowerBody-Length-Short", |

|

LowerBody-Color |

"LowerBody-Color-Black", "LowerBody-Color-Blue", "LowerBody-Color-Brown", "LowerBody-Color-Green", "LowerBody-Color-Grey", "LowerBody-Color-Orange", "LowerBody-Color-Pink", "LowerBody-Color-Purple", "LowerBody-Color-Red", "LowerBody-Color-White", "LowerBody-Color-Yellow", "LowerBody-Color-Mixture", "LowerBody-Color-Other", "LowerBody-Color-Unknown", |

|

LowerBody-Type |

"LowerBody-Type-Trousers&Shorts", "LowerBody-Type-Skirt&Dress", |

|

Accessory-Backpack |

"Accessory-Backpack", |

|

Accessory-Bag |

"Accessory-Bag", |

|

Accessory-Glasses |

"Accessory-Glasses-Normal", "Accessory-Glasses-Sun", |

|

Accessory-Hat |

"Accessory-Hat", |

Public Splits

The challenge uses a cross-validation evaluation protocol, i.e., there are three splits of training, validation, and test data. As the task is to develop models that generalize well to other domains, only data from one domain is used for training in each split. Evaluation is performed on image data originating from several domains. Since the models should be applicable to multiple unseen domains without any changes, it is not allowed to use different models/hyper-parameters/approaches/etc. for different sub-sets of evaluation data within the same split. The training data is identical for both tracks and training splits are defined as follows (see “train_<split ID>.csv”):

|

Split ID |

Training |

Evaluation |

|

0 |

Market1501 |

PA100K, PETA |

|

1 |

PA100K |

Market1501, PETA |

|

2 |

PETA |

Market1501, PA100K |

Only images specified in the train files can be used for training. The use of any other data is strictly prohibited and will be checked during code verification. There are some differences between the two tracks that are detailed in the following.

Track 1 PAR:

-

There is only one “val_all.csv/test.all.csv” file for all splits. Please extract predictions for all images specified in the file for each split. The evaluation script on the CodaLab server will automatically select the correct sub-set of images and predictions for evaluating the splits.

-

There are some attributes that do not have positive samples in the training data of a split. Such attributes are ignored during evaluation. Please note that it is nevertheless necessary to include predictions for those attributes in the prediction files to have a standardized format. We recommend setting the predictions to 0.

Track 2 Attribute-based Person Retrieval:

-

In contrast to Track 1, there are different query and gallery image files for the three splits. Please use the correct ones to create the submission files.

-

Attributes without training samples are ignored and matching persons are determined without these attributes.

Private Test Set

Since the challenge aims to investigate methods that generalize well on new and possibly unknown domains without re-training, calibration, or domain adaptation, we only provide little information about the private test set. The final challenge winners are selected based on the score achieved on the evaluation server and the performance on the private test set.

The private test set consists of images from two data sources and includes both indoor and outdoor images. Furthermore, image resolutions and camera views vary greatly and pose another challenge.

Statistics

The following table provides the number of training images per split and the number of attributes that are used for evaluation, i.e., attributes with at least one training sample.

|

Split ID |

# Training images |

# Attributes with positive sample |

|

0 |

10,000 |

35 |

|

1 |

79,001 |

40 |

|

2 |

8,668 |

39 |

Validation and public testing for Track 1 is done using 14,580 and 33,407 images, respectively. With respect to Track 2, the number of queries and gallery images can be found in the following table.

|

Split ID |

# Val queries |

# Val gallery images |

# Test queries |

# Test gallery images |

|

0 |

2,267 |

11,656 |

2,706 |

16,949 |

|

1 |

1,325 |

4,658 |

2,557 |

23,421 |

|

2 |

2,336 |

12,846 |

2,169 |

26,444 |

Links for Download and Challenge Instructions

As mentioned in the challenge description and challenge rules, both tracks have a fixed training set.

-

Track 1: Pedestrian Attribute Recognition: Train on predefined data and evaluate generalization properties.

-

Track 2: Attribute-based Person Retrieval: Train on predefined data and evaluate generalization properties.

By downloading the data using our starting kit, you agree with the Terms and Conditions of the Challenge and comply with the licenses provided for the use of the sub-datasets.

-

Starting kit: https://github.com/speckean/upar_challenge

-

Test data & validation annotations (new): available for download here (decryption keys will be released within our codalab competitions, "Participate" tab, according to our schedule)

Data Source Licenses

-

PA-100K Dataset [2]

https://github.com/xh-liu/HydraPlus-Net

License: CC-BY 4.0 license "Creative Commons — Attribution 4.0 International — CC BY 4.0".

-

PETA Dataset [3]

http://mmlab.ie.cuhk.edu.hk/projects/PETA.html

License: "This dataset is intended for research purposes only and as such cannot be used commercially. In addition, reference must be made to the aforementioned publications when this dataset is used in any academic and research reports."

-

Market1501-Attributes [4]

License: No license available.

[1] Specker, Andreas; Cormier, Mickael; Beyerer, Jürgen (2022): UPAR: Unified Pedestrian Attribute Recognition and Person Retrieval https://arxiv.org/abs/2209.02522

[2] Liu, Xihui; Zhao, Haiyu; Tian, Maoqing; Sheng, Lu; Shao, Jing; Yi, Shuai et al. (2017): HydraPlus-Net: Attentive Deep Features for Pedestrian Analysis. In: 2017 IEEE International Conference on Computer Vision. ICCV 2017 : proceedings : 22 - 29 October 2017, Venice, Italy. Unter Mitarbeit von Katsushi Ikeuchi. 2017 IEEE International Conference on Computer Vision (ICCV). Venice, 10/22/2017 - 10/29/2017. Institute of Electrical and Electronics Engineers. Piscataway, NJ: IEEE (IEEE Xplore Digital Library), S. 350–359.

[3] Deng, Yubin; Luo, Ping; Loy, Chen Change; Tang, Xiaoou (2014): Pedestrian Attribute Recognition At Far Distance. In: Kien A. Hua (Hg.): Proceedings of the 22nd ACM international conference on Multimedia. the ACM International Conference. Orlando, Florida, USA, 03.11.2014 - 07.11.2014. New York, NY: ACM, S. 789–792.

[4] Lin, Yutian; Zheng, Liang; Zheng, Zhedong; Wu, Yu; Hu, Zhilan; Yan, Chenggang; Yang, Yi (2019): Improving Person Re-identification by Attribute and Identity Learning. In: Pattern Recognition 95, S. 151–161. DOI: 10.1016/j.patcog.2019.06.006.

News

There are no news registered in UPAR (WACV'23)